Like the json_extract function, the json_extract_exact function returns a Splunk software native type value from a piece of JSON.

For most debugging scenarios, creating a launch configuration file is beneficial because it lets you configure and save debugging setup details. VS Code keeps debugging configuration information in a launch.json file located in a .vscode folder in your workspace, or in your user or workspace settings.

You likely won't be creating your own .json files but obtaining them from other sources, so it is worth learning how other data structures are converted to JSON.

If no launch configurations appear in VS Code's Run and Debug view, the most common problem is that you did not set up launch.json or that the file contains a syntax error. Alternatively, you might need to open a folder, since no-folder debugging does not support launch configurations. VS Code makes commonly used paths and other values available as variables and supports variable substitution inside strings in launch.json. This means that you do not have to use absolute paths in debug configurations.

For example, ${workspaceFolder} gives the root path of a workspace folder, ${file} the file open in the active editor, and ${env:Name} the environment variable 'Name'. You can see a full list of predefined variables in the Variables Reference or by invoking IntelliSense inside the launch.json string attributes.

In addition to the built-in fs module, several popular third-party packages such as jsonfile, fs-extra, and bfj exist.

They provide additional utility functions that make working with JSON files easier. On the other hand, you should weigh the drawbacks of adding third-party dependencies to your application.

JSON is one of the most common types of data you'll work with in Node, and being able to read and write JSON files is very useful. In this article, you'll see how to use fs.readFile and fs.writeFile to work with the filesystem asynchronously, how to convert data to and from JSON format, and how to catch errors thrown by JSON.parse. When you want to store data between server restarts with Node, JSON files are a simple and convenient choice. Whether you are reading a config file or persisting data for your application, Node has some built-in utilities that make it easy to read and write JSON files.

Using JSON files in your app can be a useful way to persist data. We will look at a few different methods for working with JSON files. The JSON format is commonly used for storing data and for configuration files.

IntelliJ IDEA helps you work with JSON files by checking their syntax and formatting. You can also use custom JSON Schemas to enable code completion in your JSON files and to validate them.

The json_extract_exact function treats the strings you pass for key extraction literally. This means that the function does not support explicitly nested paths.
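The difference between path-based and literal key extraction can be illustrated outside Splunk. The JavaScript sketch below is an analogy only: extractByPath and extractExact are hypothetical helpers, not Splunk functions, and the sample document is invented. In the first case a dotted string is walked level by level; in the second it is matched as one literal key.

```javascript
// Analogy only: these helpers are hypothetical, not Splunk's functions.

// Path-style lookup: the string is split on "." and walked level by level.
function extractByPath(obj, path) {
  let current = obj;
  for (const key of path.split(".")) {
    if (current === null || typeof current !== "object" || !(key in current)) {
      return undefined; // the path does not exist
    }
    current = current[key];
  }
  return current;
}

// Literal lookup: the whole string is treated as a single key.
function extractExact(obj, key) {
  return Object.prototype.hasOwnProperty.call(obj, key) ? obj[key] : undefined;
}

// Invented sample document containing both a dotted key and a nested object.
const doc = {
  "system.splunk.path": "/opt/splunk/",
  system: { splunk: { path: "/nested/path" } },
};

console.log(extractByPath(doc, "system.splunk.path")); // /nested/path
console.log(extractExact(doc, "system.splunk.path"));  // /opt/splunk/
```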

You can set paths with nested json_array/json_object function calls. What separates json_append from json_extend is that json_append appends an array as a single element, whereas json_extend flattens arrays and objects into separate elements as it appends them. When json_extend is given the example in the preceding paragraph, it returns ["a", "b", "c", "d", "e", "f"].

As of jQuery 1.5, all of jQuery's Ajax methods return a superset of the XMLHttpRequest object. This jQuery XHR object, or "jqXHR", returned by $.getJSON() implements the Promise interface, giving it all the properties, methods, and behavior of a Promise. For information about the arguments this function receives, see the jqXHR Object section of the $.ajax() documentation.
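To illustrate the json_append and json_extend distinction described above, here is a plain JavaScript analogy built on two hypothetical helpers, appendValues and extendValues. It is not Splunk's implementation, and it only flattens arrays; how json_extend treats object values is not modeled here.

```javascript
// Analogy only: appendValues and extendValues are hypothetical helpers.

// Append: every value becomes exactly one new element, even an array.
function appendValues(target, ...values) {
  return [...target, ...values];
}

// Extend: array values are flattened one level and their elements are
// added individually; other values are added as-is.
function extendValues(target, ...values) {
  const result = [...target];
  for (const value of values) {
    if (Array.isArray(value)) {
      result.push(...value);
    } else {
      result.push(value);
    }
  }
  return result;
}

const base = ["a", "b", "c"];
console.log(JSON.stringify(appendValues(base, ["d", "e", "f"])));
// ["a","b","c",["d","e","f"]]
console.log(JSON.stringify(extendValues(base, ["d", "e", "f"])));
// ["a","b","c","d","e","f"]
```

Both helpers return a new array and leave the input untouched, which keeps the comparison easy to follow.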

How should you go about selecting a connector to trial? My first criterion was that it should be easy to build into an uber jar file, so that I could upload it to an AWS S3 bucket and from there get it into the Instaclustr Managed Kafka Connect cluster I was using. The second criterion was how the connectors map the data from the Kafka topic to the PostgreSQL database, tables, and columns, that is, the data mappings.

Each object has a set of properties, which can be of basic types such as number or string, arrays of elements, or other objects. Those nested objects can have properties of their own, hence the hierarchical structure of JSON.

After fetch() has retrieved the file, the then() callback runs with the response as its parameter.

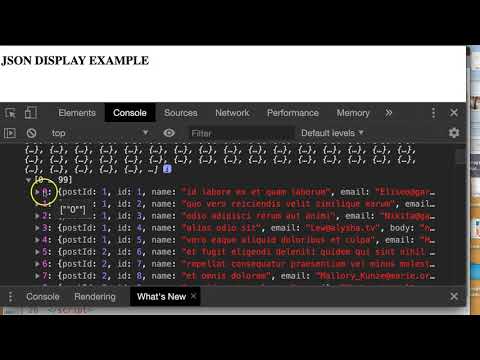

To get the JSON data from the response, we call the response's json() method.

Because the fs module is built in, you don't need to install it. It provides functions that you can use to read and write data in JSON format, and much more. We can use this knowledge to create a reusable helper function to read and parse a JSON file. The helper, which we will call jsonReader, reads and parses a JSON file for us. It takes the path to the file and a callback to receive the parsed object and any errors.
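Here is a minimal sketch of such a jsonReader helper, assuming Node's callback-style fs.readFile and UTF-8 encoded files. It follows Node's error-first callback convention; the customer.json file and its address property in the usage lines are assumptions taken from the surrounding example.

```javascript
const fs = require("fs");

// Reads filePath and calls cb(err, object) using Node's error-first convention.
function jsonReader(filePath, cb) {
  fs.readFile(filePath, "utf8", (err, fileData) => {
    if (err) {
      return cb(err); // e.g. the file does not exist
    }
    let object;
    try {
      object = JSON.parse(fileData);
    } catch (parseErr) {
      return cb(parseErr); // the file exists but is not valid JSON
    }
    // Called outside the try block so that an exception thrown by cb itself
    // is not mistaken for a parse error.
    cb(null, object);
  });
}

// Usage, assuming a customer.json file with an address property:
jsonReader("./customer.json", (err, customer) => {
  if (err) {
    console.error(err);
    return;
  }
  console.log(customer.address);
});
```

Keeping the callback invocation out of the try block is a deliberate choice: if it sat inside, an error thrown by the caller's own code would be caught and reported back to the same callback a second time.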

The jsonReader helper also catches any errors thrown by JSON.parse. When we read customer.json ourselves, we pass the resulting jsonString to JSON.parse to create an object, and can then access its address property. If JSON.parse throws an error, we handle it in a catch block. To load the data from the customer.json file, we use fs.readFile, passing it the path to our file, an optional encoding type, and a callback to receive the file data.

Reading JSON files with require, by contrast, has its downsides.

The file will only be read once; requiring it again returns the cached data from the first time require was run. That is fine for static data, but for reading a file that changes on disk, like our customer.json might, we need to read the file manually using the asynchronous fs.readFile. The fs module gives us the option of synchronous or asynchronous versions of many of its functions, and you generally want to use the async versions in your code. We will focus on async operations, but will also show the synchronous equivalent.
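The following sketch demonstrates the require() caching described above, assuming a CommonJS Node.js script. A throwaway file in the OS temp directory stands in for customer.json; after the file changes on disk, require() still returns the cached object, while fs.readFileSync and fs.promises.readFile see the new contents.

```javascript
const fs = require("fs");
const os = require("os");
const path = require("path");

// A throwaway file in the OS temp directory stands in for customer.json.
const file = path.join(os.tmpdir(), `customer-${process.pid}.json`);
fs.writeFileSync(file, JSON.stringify({ name: "Original" }));

// require() parses the file once and caches the result by resolved path.
const first = require(file);

// Simulate the file changing on disk.
fs.writeFileSync(file, JSON.stringify({ name: "Updated" }));

const second = require(file); // served from the cache
console.log(first === second, second.name); // true Original

// Reading the file explicitly always sees the current contents.
const fresh = JSON.parse(fs.readFileSync(file, "utf8")); // synchronous, blocks
console.log(fresh.name); // Updated

// The asynchronous equivalent, which does not block other code.
fs.promises.readFile(file, "utf8").then((text) => {
  console.log(JSON.parse(text).name); // Updated
});
```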

The synchronous versions block execution of other code until they are done accessing the filesystem, reading, or writing data. An async function runs without blocking other code.

By using the JSON data type, you can ingest semi-structured JSON into BigQuery without providing a schema for the JSON data upfront. This lets you store and query data that doesn't always adhere to fixed schemas and data types. By ingesting JSON data as a JSON data type, BigQuery can encode and process each JSON field individually. You can then query the values of fields and array elements within the JSON data by using the field access operator, which makes JSON queries easy to use and cost efficient.

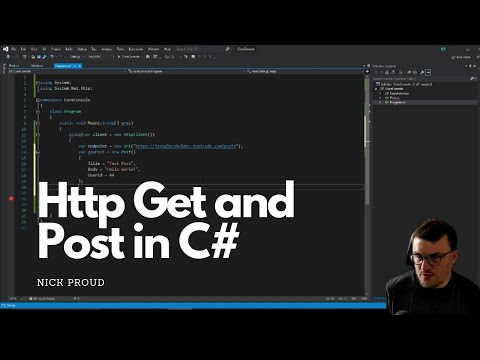

The launch.json file supports defining values that depend on the operating system where the debugger is running. To do so, put a platform-specific literal into the launch.json file and specify the corresponding properties inside that literal.

The connector configuration requires a PostgreSQL connection URL, a user and password, the topic to read from, and the table name to write to.

The schema is used to auto-create a table with the required columns and types if one doesn't exist, and then the payload values are inserted into the named columns. Remember that you need to run a separate connector for every station ID that you want to collect data from.

In the news recently was Instaclustr's acquisition of Credativ, experts in the open source PostgreSQL database. As a result, Instaclustr has managed PostgreSQL on its roadmap, and I was lucky to get access to the internal preview a few weeks ago.

So you can see there are a number of ways to send data to the server and have the model binder automatically create the correct method parameter for you.

Some require explicit configuration, while others you get for free. For example, route values and query string parameters are always bound, and for complex types (that is, not primitives like string or int) the body is also bound.

In JavaScript, we can use the require() function to load files and modules.

require() takes the path of the local file where the JSON has been saved and returns the parsed data, which we can then display with console.log().

The cbimport command supports files that have a JSON document on each line, files that contain a JSON list where each element is a document, and the Couchbase Samples format. The file format can be specified with the --format flag. See the DATASET FORMATS section of the cbimport-json documentation for more details on the supported file formats.
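To make the first two dataset layouts concrete (the cbimport documentation calls them the lines and list formats), here is a hypothetical JavaScript sketch that parses both shapes into an array of documents. It only illustrates the file layouts; it is not how cbimport works internally, and the Couchbase Samples format is not covered.

```javascript
// Hypothetical helpers illustrating two JSON dataset layouts.

// "lines" layout: one complete JSON document per line.
function parseJsonLines(text) {
  return text
    .split(/\r?\n/)
    .filter((line) => line.trim() !== "") // tolerate blank lines
    .map((line) => JSON.parse(line));
}

// "list" layout: a single JSON array whose elements are the documents.
function parseJsonList(text) {
  const parsed = JSON.parse(text);
  if (!Array.isArray(parsed)) {
    throw new TypeError("expected a top-level JSON array");
  }
  return parsed;
}

// Invented sample data describing the same two documents in both layouts.
const linesFile = '{"id": 1, "name": "a"}\n{"id": 2, "name": "b"}\n';
const listFile = '[{"id": 1, "name": "a"}, {"id": 2, "name": "b"}]';

console.log(parseJsonLines(linesFile).length); // 2
console.log(parseJsonList(listFile).length);   // 2
```

The lines layout can be processed one document at a time, while the list layout has to be parsed as a whole before any document is available.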

The am4core.createFromConfig() function returns a fully valid chart object, which you can then manipulate and change as with the object-based approach. You can update its properties or its children's properties, and even add new elements. Looking at the ColorSet properties, we want to set a hard-coded list of colors for our PieChart slices. That's a property named list, which is an array of Color objects.

The url parameter passed to the fetch function is where we get the JSON data from. We don't have to drill down into any directory, since the JSON file is in the same directory as our index.html.

The GET call for your JSON resource is made when the component mounts on the DOM. However, since it is an asynchronous task, your return statement is executed before the API call completes, so you need to check the state of your component before rendering this data.
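The ordering problem above can be sketched without React. In the hypothetical example below, renderUsers stands in for a component's render logic: it runs once before the data arrives (the state is still null) and again after the fetched array has been stored. The function name and the user data are illustrative only.

```javascript
// Hypothetical stand-in for a component's render logic.
function renderUsers(users) {
  // Before the fetch completes, the state is null (or an empty array),
  // so render a placeholder instead of calling users.map.
  if (!users || users.length === 0) {
    return "Loading...";
  }
  return users.map((user) => user.name).join(", ");
}

let state = null;
console.log(renderUsers(state)); // Loading...

// Later, once the asynchronous request has resolved and the state is set:
state = [{ name: "Jane" }, { name: "Sam" }];
console.log(renderUsers(state)); // Jane, Sam
```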

Because you update the state after fetching the required data, the component re-renders and updates the DOM with the JSON data stored in the state. The JSON used here is an array of objects, so the relevant check is whether the state exists and, if so, whether it has a non-zero length.

If the value already exists and is of a matching non-value type, the json_set function overwrites the value by default. For example, you can overwrite a number with a string, Boolean, null, and so on.

The Node runtime environment has the built-in fs module you can use to work with files in general. The fs module has methods that you can use to read and write JSON files using the callback API, promise-based API, or synchronous API. Node doesn't have a built-in function for appending to or updating fields of an existing JSON file. You can, however, read the JSON file using the readFile method of the fs module, update it, and overwrite the JSON file with the updated JSON.
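A minimal sketch of that read-modify-write cycle, using the promise-based fs/promises API. updateJsonFile is a hypothetical helper name, the update callback is assumed to return the new value, and the order_count field in the usage comment is invented for illustration.

```javascript
const fs = require("fs/promises");

// Hypothetical helper: read a JSON file, apply `update` to the parsed value,
// and overwrite the file with the result. `update` must return the new value.
async function updateJsonFile(filePath, update) {
  const text = await fs.readFile(filePath, "utf8");
  const updated = update(JSON.parse(text));
  // Pretty-print with two-space indentation so the file stays readable.
  await fs.writeFile(filePath, JSON.stringify(updated, null, 2));
  return updated;
}

// Usage, assuming customer.json holds an object with a numeric order_count:
// updateJsonFile("./customer.json", (customer) => ({
//   ...customer,
//   order_count: customer.order_count + 1,
// }));
```

The write replaces the whole file and is not atomic, so two processes updating the same file at once can overwrite each other's changes; this approach suits simple local persistence rather than concurrent access.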

First, to write data to a JSON file, we must create a JSON string of the data with JSON.stringify. This returns a JSON string representation of a JavaScript object, which can be written to a file. Similar to parsing data into an object when reading a file, we must turn our data into a string to be able to write it to a file.
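A minimal sketch of writing an object to a JSON file with JSON.stringify and the callback-based fs.writeFile. The customer data and the newCustomer.json file name are illustrative assumptions.

```javascript
const fs = require("fs");

// Illustrative data; any JSON-serializable value works.
const customer = {
  name: "Example Co.",
  order_count: 0,
  address: "123 Example Street",
};

// JSON.stringify produces the string to write; the third argument (2)
// indents nested levels by two spaces for readability.
const jsonString = JSON.stringify(customer, null, 2);

fs.writeFile("./newCustomer.json", jsonString, (err) => {
  if (err) {
    console.error("Error writing file", err);
    return;
  }
  console.log("Successfully wrote file");
});
```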

Before we can use the data from the callback in our code, we must turn it into an object; otherwise, we would just have a string of data with properties we can't access. JSON.parse takes JSON data as input and returns a new JavaScript object. The easiest way to get data from an API is with fetch, whose Response object provides a .json() method that parses the JSON body into a usable JavaScript object or array automatically.
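The difference between the raw string and the parsed object is easy to see in a short sketch. The customer document below is invented; the string stands in for what fs.readFile delivers when an encoding is given.

```javascript
// Raw text, as fs.readFile would deliver it with an encoding: a string.
const jsonString = '{"name": "Jane", "address": {"city": "Springfield"}}';

console.log(typeof jsonString);  // string
console.log(jsonString.address); // undefined (a string has no address property)

// JSON.parse turns the text into a new JavaScript object.
const customer = JSON.parse(jsonString);
console.log(customer.address.city); // Springfield

// Invalid JSON (here, a truncated document) makes JSON.parse throw.
try {
  JSON.parse('{"name": ');
} catch (err) {
  console.log(err instanceof SyntaxError); // true
}
```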

The bq command-line client provides a number of features for interacting with BigQuery, such as loading, exporting, creating tables, and retrieving information about datasets. For a full list of documented features and usage, see Google's Command-line tool reference.

The main difference between a JSON object and a regular JavaScript object, also called an object literal, comes down to the quotation marks. All the keys and string type values in a JSON object have to be wrapped in double quotation marks ("). In terms of syntax, JavaScript objects are similar to JSON, but the keys in JavaScript objects do not have to be quoted strings. JavaScript objects are also less limited in the types of values they can hold, so they can use functions as values.
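The differences between an object literal and JSON show up as soon as you convert between them. In this sketch (the profile data is invented), JSON.stringify quotes every key and drops the function-valued property, while JSON.parse rejects unquoted keys and single-quoted strings.

```javascript
// A JavaScript object literal: keys need no quotes, and values may be functions.
const profile = {
  name: "Jane",
  age: 30,
  greet() {
    return `Hi, I'm ${this.name}`;
  },
};

// Serializing to JSON wraps every key and string value in double quotes
// and silently omits the function-valued property.
const json = JSON.stringify(profile);
console.log(json); // {"name":"Jane","age":30}

// The reverse direction is strict: unquoted keys or single quotes are rejected.
try {
  JSON.parse("{name: 'Jane'}");
} catch (err) {
  console.log(err.name); // SyntaxError
}
```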

When you're working with JSON, you'll likely see JSON objects in a .json file, but they can also exist as a JSON object or string within the context of a program.

JSON is a widely used format that allows for semi-structured data, because it does not require a schema. Applications can use a "schema-on-read" approach, where the application ingests the data and then queries based on assumptions about the schema of that data. This approach differs from the STRUCT type in BigQuery, which requires a fixed schema that is enforced for all values stored in a column of STRUCT type.

When you call fetch(), the Response object it resolves to does not directly contain the JSON response body; instead, it is a representation of the entire HTTP response.
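A minimal sketch of that flow in Node.js, assuming Node 18 or later, where fetch is available globally. fetchJson and startServer are hypothetical names; the small local server (serving an invented /data.json and returning 404 for everything else) exists only so the example runs without network access.

```javascript
const http = require("http");

// Fetches url and parses the body as JSON. fetch() resolves even for
// 404 or 500 responses, so the status is checked explicitly.
async function fetchJson(url) {
  const response = await fetch(url); // a Response describing the whole reply
  if (!response.ok) {
    throw new Error(`HTTP ${response.status} for ${url}`);
  }
  return response.json(); // reads the body stream and parses it
}

// A throwaway local server (serving /data.json, 404 otherwise) so the
// example runs without network access.
function startServer() {
  const server = http.createServer((req, res) => {
    if (req.url === "/data.json") {
      res.writeHead(200, { "Content-Type": "application/json", Connection: "close" });
      res.end(JSON.stringify([{ id: 1, name: "Jane" }]));
    } else {
      res.writeHead(404, { Connection: "close" });
      res.end();
    }
  });
  return new Promise((resolve) => {
    server.listen(0, "127.0.0.1", () => {
      resolve({ server, base: `http://127.0.0.1:${server.address().port}` });
    });
  });
}

async function main() {
  const { server, base } = await startServer();
  try {
    console.log(await fetchJson(`${base}/data.json`)); // logs the parsed array
    await fetchJson(`${base}/missing.json`); // rejects because of the 404
  } catch (err) {
    console.error(err.message);
  } finally {
    server.close();
  }
}

main();
```

Without the response.ok check, the 404 request would go on to call response.json() on an empty body and fail with a less helpful parse error.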

A typical use is fetching a JSON file across the network and printing it to the console. Note that the Promise returned from fetch() won't reject on an HTTP error status, even if the response is an HTTP 404 or 500.

In some cases, you may need to configure additional options for the browser debug session, or use a different debugger entirely.

You can do this by setting action to startDebugging with a name property set to the name of the launch configuration to start when the pattern is matched. In addition, the debug status appears in the Status Bar, showing the active debug configuration. By selecting the debug status, a user can change the active launch configuration and start debugging without needing to open the Run view.

It is helpful to first create a sample Node.js application before reading about debugging.

You can follow the Node.js walkthrough to install Node.js and create a simple "Hello World" JavaScript application (app.js). Once you have a simple application set up, this page will take you through VS Code debugging features.

For PostgreSQL, the first thing is to decide how to connect to the database for testing. There are a few options for client applications, including psql (a terminal-based front end) and GUIs such as pgAdmin4.

The presence of this extension will, by default, replace the output format configuration from the console configuration.

This means that the format string and the color settings will be ignored, while the other console configuration items will continue to be applied. All possible properties are listed in the logging configuration reference.

If your Angular application goes offline, reading the JSON file with HttpClient will fail. In that case, you can instead import local JSON files with the ES6+ import statement, which supports importing JSON files.

Next, move the previous src/app/data.json file to the src/assets folder.

I'm starting to learn D3, and it seems like a powerful framework. I can see the data, but nothing happens after loading it into the D3 library, and there are no error messages in the JavaScript console.

The cbimport-json command is used to quickly import data from various files containing JSON data.
